AI gave us oil and we strapped it to a horse

Right now UX is the bottleneck for AI productivity, not the models.

We were all riding horses three years ago when OpenAI handed each of us a bucket of oil. Today people are still riding round with their bucket of oil, parroting “This is making us go faster!” while splashing it all over their poor horse.

Some people say current generative models don’t justify the hype, but I disagree: the UX is behind the AI. Today, when people say they work on multiple things in parallel, what they usually mean is that they’re switching between tabs of model conversations and tabs of Codex/Claude Code. That’s a productivity gain, in that we ship more features than before, but it feels like organising ten people with the constraint that you can only communicate by running right up to them. It’s a breathless way of working.

We don’t yet have the UX that lets us harness this speed. With better interfaces we could go much faster with the current models, and much, much faster with the coming ones. People are working hard on it, of course. My plan for this blog is to collect and share the ideas that could make up the ‘100x UX’.

I’m starting with Steve Yegge’s wonderful, unhinged launch post for Gas Town, where he answers the question: how can you have 30 Claudes coding productively while keeping an overview of what’s going on?

It’s an example of how fast someone can go with the right system. He built Gas Town in just over two weeks, with 2,200 commits from him (and his ‘Polecats’). The system has five types of agents: one to orchestrate, one to work, and three to keep all the work spinning. Around the agents sits infrastructure that keeps a record of the work and makes sure it can be picked back up and built on.

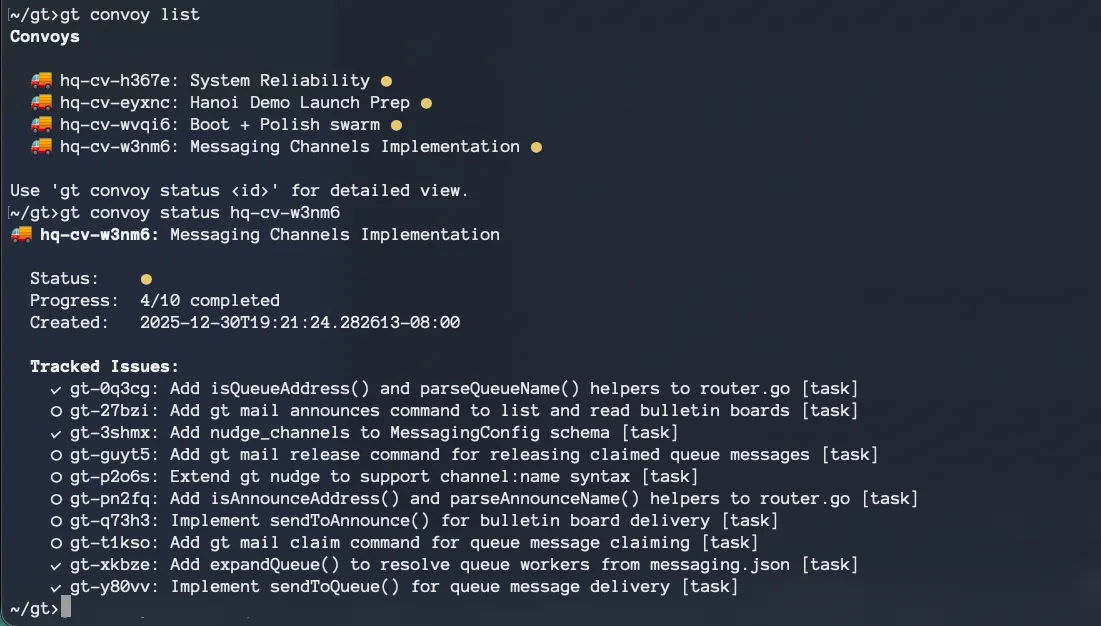

A Gas Town Convoy (source: Steve Yegge)

He had to do a lot of wrangling just to get his coding factory running, and he had to build his own memory system to work around the models’ context limits. LLM memory is a hot startup topic for a reason. It’s an open question whether it stays that way or whether the model providers absorb the problem into their own systems.

Finding the right UX for these tools is both a design challenge (what does an interface for parallelised work look like?) and an awkward technical challenge (getting current LLMs to actually work that way). Because of this, Gas Town is complicated. It’s hard to use and hard to understand because we don’t yet know how to do 100x productivity. Someone helpfully struggled through using it for three hours if you want to see just how complicated. Despite that, Yegge’s design marks one edge of what’s coming.

Update: Maggie Appleton has since reviewed Gas Town in detail (Gas Town’s Agent Patterns, Design Bottlenecks, and Vibecoding at Scale). My favourite question from her piece is how close developers should be to code written by orchestrated agents, and how that affects the design of these systems.
